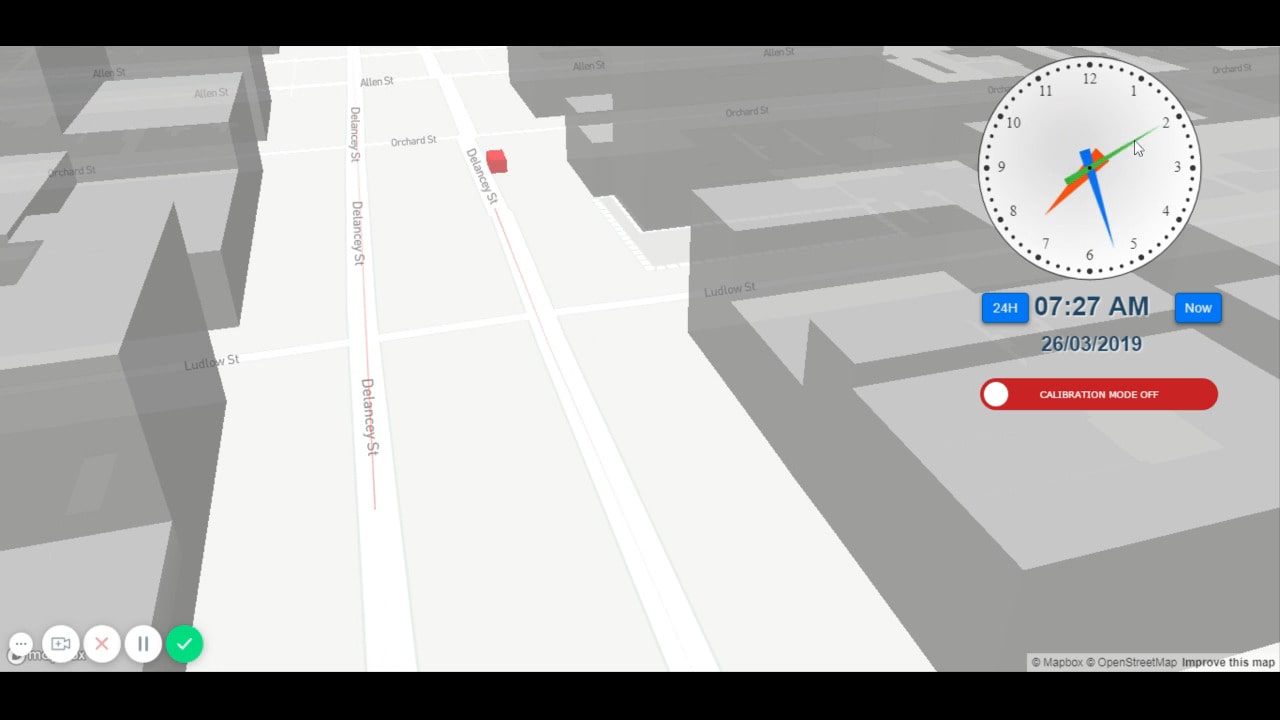

The physical world and the internet are merging, and they have been ever since the first webcam gazed upon the office coffee pot. Now, with the onset of AR, sensor fusion, and AI, we have the natural extension of this merging - a digital mirror of our real world (aka 'World Mirrors'). Tesla "Autopilot" is a good introduction to the World Mirror concept - the idea that the car can not only collect enough data about the real world to model it effectively, but also have enough insight into the mechanics of the world to start making decisions using the world model it generated. We are getting domain-specific instances of this idea in a lot of fields, especially in robotics, where SLAM (Simultaneous Localization and Mapping) is pervasive for anything from quadcopters to humanoid robots. Although SLAM only covers the model-building aspect of a World Mirror, it is important to point out that once a system has a model of the world, AI can then be used to start driving behavior for the robot/drone/car.

Having an agent like a car, drone, or robot model and react to the world is all well and good, but the true power comes from merging these disparate models into a unified whole. I haven't seen many examples of this yet, but there are a couple of projects that are starting to explore this idea. Grassland is one example: it takes 2D video from any source and runs object detection ML on it to enable distributed tracking of anything from cars to people. These updates are shared across a p2p network with all the other sensors on the network, allowing for a realtime, holistic view of any contributed video stream.
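To make the "merging disparate models" idea a bit more concrete, here is a minimal sketch of how detections from several sensors might be folded into one shared model. This is not how Grassland actually works; the `Detection` and `WorldMirror` names, the fields, and the `merge_radius` threshold are all hypothetical, and the sketch assumes each detection has already been projected from 2D video into a common world coordinate frame (which is a hard problem in its own right).

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Detection:
    """One object sighting from one sensor (hypothetical schema)."""
    sensor_id: str    # which camera/node reported the sighting
    label: str        # detector class, e.g. "car" or "person"
    x: float          # position in an assumed shared world frame (metres)
    y: float
    timestamp: float  # seconds


@dataclass
class WorldMirror:
    """Toy shared model: one entry per distinct object seen by any sensor."""
    merge_radius: float = 2.0  # assumed threshold for "same object" (metres)
    objects: list = field(default_factory=list)

    def ingest(self, det: Detection) -> None:
        """Merge a detection into the model, or add it as a new object."""
        for i, obj in enumerate(self.objects):
            same_label = obj.label == det.label
            dist_sq = (obj.x - det.x) ** 2 + (obj.y - det.y) ** 2
            if same_label and dist_sq <= self.merge_radius ** 2:
                # Treat as the same object; keep the most recent sighting.
                if det.timestamp >= obj.timestamp:
                    self.objects[i] = det
                return
        self.objects.append(det)


def demo() -> WorldMirror:
    """Feed updates from two hypothetical cameras into one mirror."""
    mirror = WorldMirror()
    updates = [
        Detection("cam-a", "car", 10.0, 5.0, 1.0),
        Detection("cam-b", "car", 10.5, 5.2, 1.1),     # same car, second view
        Detection("cam-b", "person", 40.0, 8.0, 1.1),  # a different object
    ]
    for update in updates:
        mirror.ingest(update)
    return mirror


if __name__ == "__main__":
    for obj in demo().objects:
        print(obj)
```

In a real p2p setup each node would broadcast its detections and every peer would run something like `ingest` locally. A nearest-neighbour merge like this one is the simplest thing that could work; a real tracker would also need to handle identity over time, clock skew between sensors, and calibration error.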